Amazon AppFlow with Salesforce in action

— AWS, Serverless, Data, Architecture — 5 min read

Amazon AppFlow is an AWS serverless service launched in April 2020. It simplifies transferring data from major SaaS products to AWS. In this post, I will discuss how we've been using AppFlow with Salesforce in a client project, walk through the usage patterns we have adopted, and briefly touch on some lessons we learned along the way.

photo credit: apple.com/au/wallet

Background

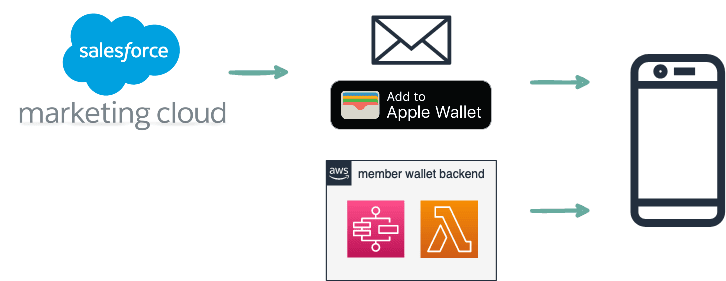

Our client is in the retail business. Last year, they launched a mobile solution that brings customers' membership cards onto Apple Wallet and Android Wallet Pass. As a user, you can download your membership pass onto your phone and use it the next time you go shopping. No more plastic cards in your wallet! Here is the solution overview:

figure-1: membership wallet solution overview

The Challenge

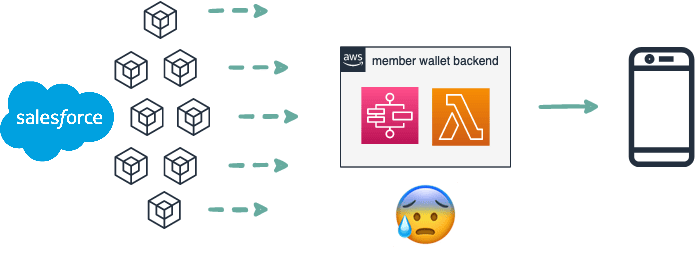

While this solution lays the groundwork, it merely shows your name and a barcode on your phone. Everything is static after you install the pass. But what about your total spending? How much more before you get an upgrade? Do you have any promotions available, or expiring? As a customer, you would expect a bit more, wouldn’t you?

Well, at first glance the solution seems straightforward: since Apple and Google both support pushing data changes to wallet passes, all we need to do is grab the data and send it to Apple/Google. Easy-peasy!

However, as in a typical enterprise IT environment, our client manages customer data primarily in Salesforce (with some Salesforce apps, custom objects, and custom fields) and in a couple of other SaaS products. Complex data models aside, there is more to handle: data validation, data aggregation, and business rules, not to mention solution availability, scalability, and extensibility. It would be challenging for the existing solution to pull all of this off.

figure-2: complex data models and business rules

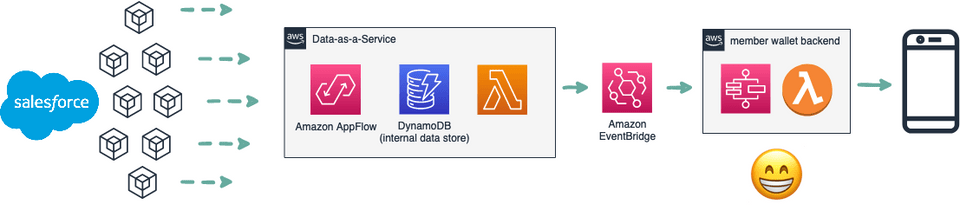

The Solution

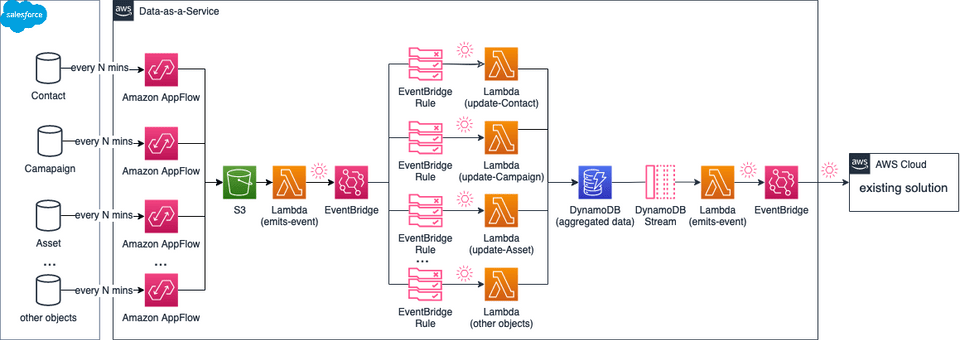

What we built is a serverless, event-driven data solution that sits between Salesforce and the existing solution. It is part of the Data-as-a-Service (DaaS) platform we've been building for this client. This solution uses Amazon AppFlow to get data from multiple Salesforce objects, aggregates and stores the data internally while applying a set of business rules, and then sends the final data as events to the back end of the existing solution.

figure-3: a DaaS solution leveraging Amazon AppFlow

Why Amazon AppFlow

While other services such as AWS Database Migration Service (AWS DMS) can also help transfer Salesforce data, we chose Amazon AppFlow because of:

- its serverless nature: low infrastructure maintenance overhead

- its on-demand pricing model: you pay for what you use

- its intuitive user interface on the AWS Console

Overall, we found it easy and inexpensive for us to explore data sources and experiment with ideas during the R&D phase.

Having said that, AppFlow is still a new addition to the AWS service family. Its documentation is minimal and lacks concrete examples, and even a Google search returns limited results. Inevitably, we had to climb the learning curve, and we found ourselves down the rabbit hole several times. But fear not! That is why I'm here: learning, sharing, and helping.

figure-4: googling "AppFlow CloudFormation" returns six pages

Up next, technical nitty-gritty!

AppFlow Settings Affect Usage Patterns

When setting up an AppFlow flow, you need to define three key settings:

- Source: where does the data come from? A Salesforce object, or a change event?

- Destination: where should the data go? An S3 bucket, or an EventBridge event bus?

- Trigger: how, and how often, should the flow run? A one-off run started manually, a recurring run every 5 minutes, or a run whenever the data source reports a change?

Together, these settings define your AppFlow usage patterns, so take your time to explore all the possibilities. Our solution uses the following three patterns.
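The Trigger setting is what most clearly separates the three patterns below. As a minimal sketch, assuming you create flows with boto3's `create_flow` rather than the console, the hypothetical helper `trigger_config` returns the `triggerConfig` value for each pattern. The `rate(5minutes)` schedule is only an example value; check the exact expression syntax against the AppFlow API reference.

```python
"""Sketch: the Trigger setting behind each usage pattern, shaped like the
triggerConfig parameter of boto3's AppFlow create_flow call."""


def trigger_config(pattern: str, schedule_expression: str = "rate(5minutes)") -> dict:
    """Return a triggerConfig dict for 'on_demand', 'scheduled' or 'event'."""
    if pattern == "on_demand":
        # Pattern 1: runs only when started manually (console, CLI or StartFlow).
        return {"triggerType": "OnDemand"}
    if pattern == "scheduled":
        # Pattern 2: runs periodically and pulls only new/changed records.
        return {
            "triggerType": "Scheduled",
            "triggerProperties": {
                "Scheduled": {
                    "scheduleExpression": schedule_expression,
                    "dataPullMode": "Incremental",
                }
            },
        }
    if pattern == "event":
        # Pattern 3: runs whenever the Salesforce source emits a change event.
        return {"triggerType": "Event"}
    raise ValueError(f"unknown pattern: {pattern!r}")
```

You would pass the result as `triggerConfig=` alongside `flowName`, `sourceFlowConfig`, `destinationFlowConfigList`, and `tasks` when calling `boto3.client("appflow").create_flow(...)`. Keeping the trigger separate makes it easy to reuse the same source, destination, and mappings while experimenting with patterns.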

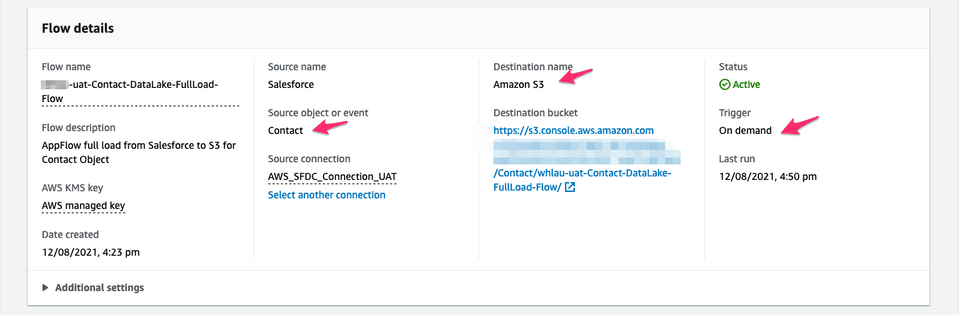

PATTERN 1 - APPFLOW RUNS ON-DEMAND

figure-5: on-demand AppFlow settings

A flow configured this way must be run manually. Each run fetches the complete data set from the source. We found this pattern can help us:

- verify AppFlow permissions: just because you have an AppFlow-Salesforce connection, it doesn't mean AppFlow can read everything in Salesforce.

- explore data objects, fields, mappings, and filters: a thorough understanding of these beforehand can reduce the trial-and-error effort you are likely to spend later on.

- observe the output: previewing the data sent to S3 or EventBridge can help you plan the event consumers' data stream.

- evaluate the data volume: because of its low cost, we can freely run on-demand flows in the production environment to get a sense of the actual data volume. Some busy Salesforce objects, such as Contact, can produce several gigabytes of data, and your downstream solution needs to be able to handle that volume.

- run a one-off, full-scale data transfer to an S3 bucket: the output can then be queried by data lake services such as Amazon Athena.
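To evaluate data volume, an on-demand run can also be started and inspected programmatically. The sketch below assumes a boto3 AppFlow client and uses `start_flow` and `describe_flow_execution_records`; the function name `run_and_measure`, the polling interval, and the poll limit are hypothetical choices, and it only inspects the first page of execution records.

```python
"""Sketch: start an on-demand flow and report how much data it moved."""
import time


def run_and_measure(appflow, flow_name: str, poll_seconds: float = 15.0,
                    max_polls: int = 240) -> dict:
    """Start `flow_name` and poll until that execution finishes.

    `appflow` is assumed to be boto3.client("appflow"). Returns the execution's
    executionResult (e.g. recordsProcessed, bytesProcessed, bytesWritten).
    """
    # For on-demand flows, StartFlow returns the new execution's ID.
    execution_id = appflow.start_flow(flowName=flow_name)["executionId"]
    for _ in range(max_polls):
        page = appflow.describe_flow_execution_records(flowName=flow_name)
        for execution in page.get("flowExecutions", []):
            if execution.get("executionId") != execution_id:
                continue  # an older run of the same flow
            status = execution.get("executionStatus")
            result = execution.get("executionResult", {})
            if status == "Successful":
                return result
            if status == "Error":
                raise RuntimeError(f"run {execution_id} failed: {result.get('errorInfo')}")
        time.sleep(poll_seconds)  # still InProgress (or not listed yet)
    raise TimeoutError(f"run {execution_id} did not finish in time")
```

Running this against a busy object in production (for example, a hypothetical `contacts-full` flow) gives a quick record and byte count to size downstream consumers against.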

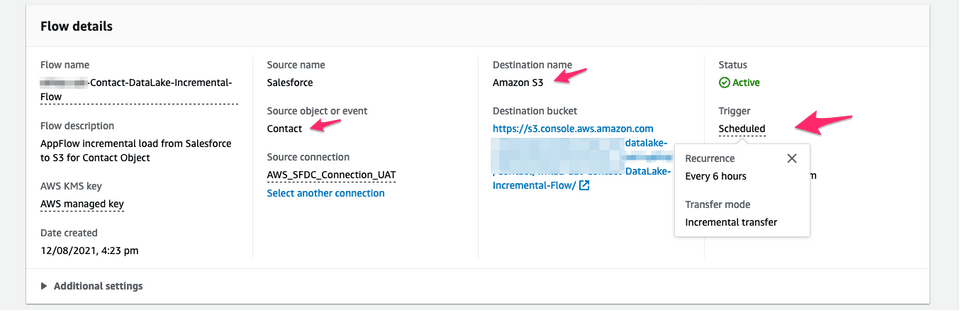

PATTERN 2 - APPFLOW TRIGGERED BY SCHEDULE

figure-6: scheduled AppFlow settings

With a scheduled trigger, the flow runs periodically and selectively transfers records that are a) new, b) deleted, or c) modified.

AppFlow checks each record's modification timestamp to decide whether to transfer it. For Salesforce objects, the system field LastModifiedDate is normally used.

Note: AppFlow fetches ALL data fields defined in the mapping table, including fields that have not changed since the last run. This is a significant difference from the next pattern.
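In API terms, the timestamp check is configured on the flow's source. A minimal sketch of a `sourceFlowConfig` for a scheduled, incremental Salesforce flow, assuming boto3's `create_flow` request shape; the object name `Contact` and connection name `my-salesforce-connection` are placeholders, and turning on `includeDeletedRecords` is an assumption about wanting deletions delivered.

```python
"""Sketch: Salesforce source settings for a scheduled, incremental flow."""


def salesforce_source(object_name: str, connector_profile: str,
                      timestamp_field: str = "LastModifiedDate") -> dict:
    """Return a sourceFlowConfig dict for boto3's appflow create_flow."""
    return {
        "connectorType": "Salesforce",
        # Name of the AppFlow-Salesforce connection created beforehand.
        "connectorProfileName": connector_profile,
        "sourceConnectorProperties": {
            "Salesforce": {
                "object": object_name,
                # Assumption: we also want deleted records in the output.
                "includeDeletedRecords": True,
            }
        },
        # Timestamp field AppFlow compares to decide what is new or modified.
        "incrementalPullConfig": {"datetimeTypeFieldName": timestamp_field},
    }
```

This would be paired with a `Scheduled` trigger whose `dataPullMode` is `Incremental`; with `Complete`, every scheduled run would pull the full data set instead.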

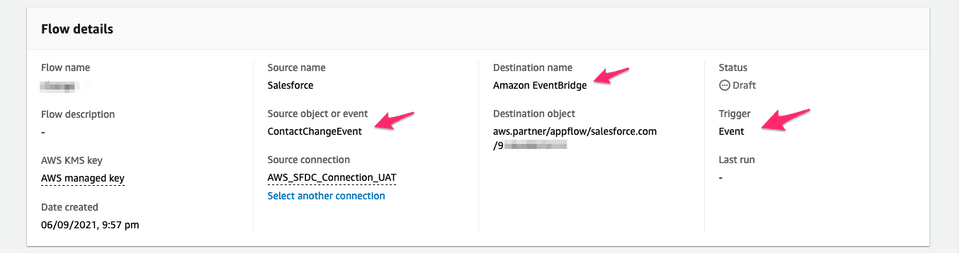

PATTERN 3 - APPFLOW TRIGGERED BY EVENT

figure-7: event-triggered AppFlow settings

With this setup, AppFlow can send the data as EventBridge events, whereas Patterns 1 and 2 can only upload data as line-delimited JSON objects to S3 buckets.
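Because Patterns 1 and 2 write line-delimited JSON, a consumer can stream the S3 object one record at a time instead of loading it whole. A minimal sketch, assuming the lines come from boto3's `s3.get_object(...)["Body"].iter_lines()`; `parse_json_lines` is a hypothetical helper.

```python
"""Sketch: stream records out of a line-delimited JSON file."""
import json
from typing import Iterable, Iterator


def parse_json_lines(lines: Iterable[bytes]) -> Iterator[dict]:
    """Yield one record per non-blank line of a line-delimited JSON file."""
    for raw in lines:
        line = raw.strip()
        if line:  # tolerate blank lines, e.g. a trailing newline
            yield json.loads(line)
```

Since it is a generator, only one record is held in memory at a time, which matters once files reach the multi-gigabyte sizes mentioned for busy objects.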

During the proof-of-concept phase, we thought Pattern 3 would be ideal because of the event-driven nature of our solution. But we soon ran into Pattern 3's limitations: it only brings in incremental changes, meaning the output contains only the fields that have changed.

In hindsight, combining Pattern 1 and Pattern 3 could have been an alternative: a data baseline from Pattern 1, followed by incremental changes from Pattern 3. But at the time, we felt Pattern 2 suited our needs and required less engineering effort, so it became the workhorse of our solution.
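With the Pattern 1 + Pattern 3 alternative, the consumer has to merge each partial change onto the baseline it already holds, because a change event carries only the fields that changed. A minimal sketch, assuming records are dicts keyed by the Salesforce `Id` field and that changes are applied in order; `apply_change` and the sample fields are hypothetical.

```python
"""Sketch: merge a Pattern 3 change (changed fields only) onto a baseline row."""


def apply_change(baseline: dict, change: dict, key: str = "Id") -> dict:
    """Return a new record: baseline fields, overridden by the changed ones."""
    if baseline.get(key) != change.get(key):
        raise ValueError("change does not belong to this record")
    merged = dict(baseline)  # copy, so the stored baseline is left untouched
    merged.update(change)    # changed fields win; unchanged fields carry over
    return merged
```

This is the extra engineering that Pattern 2 avoids: a scheduled run already delivers every mapped field, so each output row is complete on its own.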

Lessons Learned

To keep this post short, I can only give you some rough ideas here. Each of these topics could be a separate blog post with sample code:

- Handle large files: as mentioned earlier, the initial AppFlow data load could contain millions of records. Processing all of them might exceed Lambda's 15-minute timeout limit. We ended up with something inspired by the article Processing Large S3 Files With AWS Lambda. We could also use AWS Glue or similar services to handle large files, but that is a discussion for another day.

- Consume AppFlow output in an idempotent way: AppFlow output files and events may arrive out of order; an earlier, larger file may land in the S3 bucket after later, smaller ones. Thanks to the LastModifiedDate timestamp field and DynamoDB conditional updates, we can avoid overwriting newer data with outdated data.

- Define AppFlow in CloudFormation templates: AppFlow provides an intuitive UI on the AWS Console, but defining a flow in a CloudFormation template does not feel that way. The GitHub repo https://github.com/aws-samples/amazon-appflow was the most relevant example I could find. The whole experience feels clunky. Some features are available in the AWS Console and CLI but missing from CloudFormation, for example, mapping all fields without having to repeatedly define projection and mapping tasks for every single field.

- Don't forget to activate your flow: an AppFlow flow created with CloudFormation or on the AWS Console is inactive by default, and you have to activate it manually. But we love Infrastructure-as-Code so much that we ended up with a CloudFormation Custom Resource backed by a Lambda function. In essence, the Lambda function uses the AWS SDK to start and stop the flow when the corresponding CloudFormation events happen.
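For the large-files lesson, one way to stay inside Lambda's 15-minute limit is to stop before the deadline and hand back a resume point. This is a sketch of that general idea, not the code from the article mentioned above; `process_until_deadline`, the 60-second safety margin, and resuming by line number are assumptions. In Lambda, you would pass `context.get_remaining_time_in_millis` as `remaining_ms`.

```python
"""Sketch: process line-delimited JSON until the Lambda deadline approaches."""
import json
from typing import Callable, Iterable


def process_until_deadline(lines: Iterable[bytes], handle: Callable[[dict], None],
                           remaining_ms: Callable[[], int], start_line: int = 0,
                           safety_margin_ms: int = 60_000) -> dict:
    """Handle records from `start_line` on; stop early if time runs short.

    Returns {"done": True, "next_line": None} when the file is finished, or
    {"done": False, "next_line": n} so a follow-up invocation can resume at n.
    """
    for line_no, raw in enumerate(lines):
        if line_no < start_line:
            continue  # already handled by a previous invocation
        if remaining_ms() < safety_margin_ms:
            return {"done": False, "next_line": line_no}
        if raw.strip():  # skip blank lines
            handle(json.loads(raw))
    return {"done": True, "next_line": None}
```

Skipping by line number still re-reads the skipped bytes. Recording a byte offset and resuming with a ranged S3 `get_object` (its `Range` parameter) would avoid that, at the cost of tracking line boundaries yourself.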
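The idempotent-consumption lesson can be sketched as a DynamoDB conditional write: the put succeeds only for a new item or for a strictly newer `LastModifiedDate`. This assumes a low-level boto3 DynamoDB client, a hypothetical table keyed on `Id`, and `LastModifiedDate` strings in one consistent format and time zone, so that string order equals time order; `save_if_newer` is a hypothetical name.

```python
"""Sketch: skip out-of-order AppFlow records with a DynamoDB conditional put."""
import json


def save_if_newer(dynamodb, table_name: str, record: dict) -> bool:
    """Store `record` unless the table already holds the same or a newer version.

    `dynamodb` is assumed to be boto3.client("dynamodb"). Returns True if written.
    """
    try:
        dynamodb.put_item(
            TableName=table_name,
            Item={
                "Id": {"S": record["Id"]},
                "LastModifiedDate": {"S": record["LastModifiedDate"]},
                "Payload": {"S": json.dumps(record)},
            },
            # Write a brand-new item, or overwrite only with a strictly newer one.
            ConditionExpression="attribute_not_exists(Id) OR LastModifiedDate < :incoming",
            ExpressionAttributeValues={":incoming": {"S": record["LastModifiedDate"]}},
        )
        return True
    except dynamodb.exceptions.ConditionalCheckFailedException:
        return False  # stale or duplicate record: a newer version is stored
```

Equal timestamps are rejected, so a duplicate delivery of the same record becomes a no-op rather than a rewrite.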
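For the CloudFormation lesson, one workaround for repeating a projection task and a mapping task per field is to generate the `Tasks` list. A minimal sketch, assuming the `AWS::AppFlow::Flow` task shape (`TaskType`, `SourceFields`, `ConnectorOperator`, `DestinationField`) and a pass-through `NO_OP` mapping; it omits `TaskProperties` such as `DESTINATION_DATA_TYPE`, which you may need to add, and the field names are placeholders.

```python
"""Sketch: generate AWS::AppFlow::Flow Tasks that copy every listed field."""
import json
from typing import List


def projection_and_mapping_tasks(fields: List[str]) -> List[dict]:
    """One Filter (PROJECTION) task for all fields, then one Map task per field."""
    tasks = [{
        # Select (project) every field we want AppFlow to pull from Salesforce.
        "TaskType": "Filter",
        "SourceFields": list(fields),
        "ConnectorOperator": {"Salesforce": "PROJECTION"},
    }]
    for field in fields:
        # Copy each field to a destination field of the same name, unchanged.
        tasks.append({
            "TaskType": "Map",
            "SourceFields": [field],
            "ConnectorOperator": {"Salesforce": "NO_OP"},
            "DestinationField": field,
        })
    return tasks


if __name__ == "__main__":
    # Print a JSON fragment to paste under the flow resource's Properties.
    print(json.dumps({"Tasks": projection_and_mapping_tasks(["Id", "Email", "LastModifiedDate"])}, indent=2))
```

Generating the list keeps the template in sync with a single field list, instead of hand-editing two places for every field added.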
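The activation Custom Resource could look roughly like the following. It is a sketch, assuming the flow name arrives as a hypothetical `FlowName` resource property: on Create and Update it calls `start_flow` (which activates scheduled and event-triggered flows), on Delete it calls `stop_flow` (which deactivates them), and it always reports back to CloudFormation's pre-signed `ResponseURL` so the stack does not hang. It does not handle edge cases such as a renamed flow or a flow that is already active.

```python
"""Sketch: CloudFormation Custom Resource Lambda that activates an AppFlow flow."""
import json
import urllib.request


def activate_or_deactivate(appflow, request_type: str, flow_name: str) -> None:
    """Activate the flow on Create/Update; deactivate it on Delete."""
    if request_type in ("Create", "Update"):
        appflow.start_flow(flowName=flow_name)
    elif request_type == "Delete":
        appflow.stop_flow(flowName=flow_name)


def build_response(event: dict, status: str, reason: str = "") -> dict:
    """Response body CloudFormation expects at the pre-signed ResponseURL."""
    return {
        "Status": status,  # "SUCCESS" or "FAILED"
        "Reason": reason or "See CloudWatch Logs for details",
        "PhysicalResourceId": event["ResourceProperties"].get("FlowName", event["LogicalResourceId"]),
        "StackId": event["StackId"],
        "RequestId": event["RequestId"],
        "LogicalResourceId": event["LogicalResourceId"],
    }


def lambda_handler(event, context):
    import boto3  # bundled with the Lambda Python runtime

    status, reason = "SUCCESS", ""
    try:
        activate_or_deactivate(boto3.client("appflow"), event["RequestType"],
                               event["ResourceProperties"]["FlowName"])
    except Exception as error:
        # Report failures, except on Delete, so a missing flow can't block teardown.
        if event["RequestType"] != "Delete":
            status, reason = "FAILED", str(error)
    body = json.dumps(build_response(event, status, reason)).encode()
    # The pre-signed URL expects a PUT with an empty Content-Type.
    request = urllib.request.Request(event["ResponseURL"], data=body, method="PUT",
                                     headers={"Content-Type": ""})
    urllib.request.urlopen(request)
```

In the template, the custom resource would pass the flow's name as `FlowName` and depend on the flow resource, so activation runs after the flow exists and deactivation runs before it is deleted.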

Final thoughts

After getting over the initial learning curve, our engineering team has gained insight into Amazon AppFlow's strengths and limitations. We now feel confident making decisions about why, when, and how to use AppFlow, and engineers on the team can proficiently incorporate AppFlow into their solutions to solve business problems.

While writing this blog, I noticed some subtle yet helpful enhancements to the AppFlow creation process on the AWS Console. How exciting it is to be one of the trailblazers on this AppFlow journey!

figure-8: high-level solution architecture

This blog was originally posted at https://cevo.com.au/post/amazon-appflow-with-salesforce-in-action/