A recent data migration solution design, and why an unexpected CDK behaviour cost me an hour

— AWS, CDK, S3, Glue ETL, DynamoDB, Data — 2 min read

So we had to migrate a live system from one AWS account to another. The main part of this task was migrating several DynamoDB tables to the target AWS account. I had manually configured a working proof-of-concept in the AWS console, inspired by the AWS doc "Using AWS Glue and Amazon DynamoDB export".

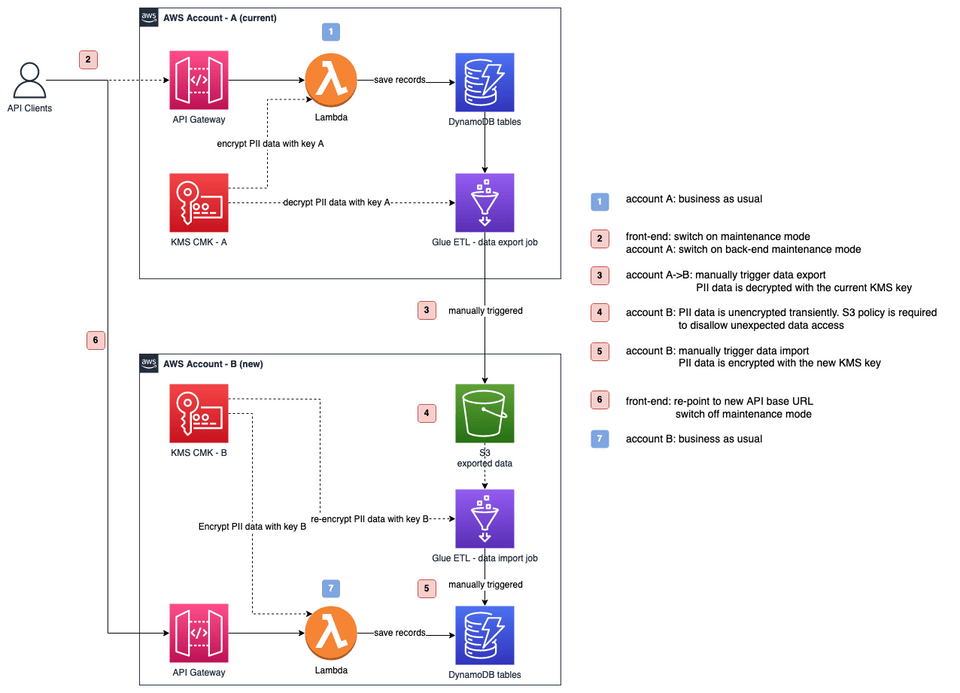

Here is the solution diagram.

The only variation from the AWS solution design is that my data exporting/importing jobs (AWS Glue ETL) have extra steps that decrypt/encrypt PII data fields. These fields were encrypted by the application before being stored in the DynamoDB tables, which is not to be confused with DynamoDB encryption at rest.

We had these extra steps because AWS KMS keys can only be shared across AWS accounts, not transferred. After a discussion with another engineer, we felt sharing a KMS key across accounts wouldn't be a viable solution for us, since the old system (and its resources) will eventually go away. Instead, we took a "decrypt then re-encrypt" approach to decouple the new system from the old key.
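To make the "decrypt then re-encrypt" idea concrete, here is a minimal TypeScript sketch. It is not the actual Glue job code: the field name `email`, the helper `transformPiiFields`, and the two toy "cipher" functions are all hypothetical stand-ins, where a real job would call the source and target KMS keys instead.

```typescript
// Illustrative only: the real jobs ran in AWS Glue ETL, and the cipher functions
// below are toy stand-ins for calls to the source and target KMS keys.
type DynamoItem = Record<string, string>;
type FieldTransform = (value: string) => string;

// Apply `transform` to the named PII fields and leave everything else untouched.
function transformPiiFields(
  item: DynamoItem,
  piiFields: string[],
  transform: FieldTransform,
): DynamoItem {
  const result: DynamoItem = { ...item };
  for (const field of piiFields) {
    const value = result[field];
    if (value !== undefined) {
      result[field] = transform(value);
    }
  }
  return result;
}

// Toy, reversible "ciphers" so the sketch runs without AWS access (NOT real encryption).
const decryptWithSourceKey: FieldTransform = (v) => v.replace(/^src:/, '');
const encryptWithTargetKey: FieldTransform = (v) => `dst:${v}`;

const piiFieldNames = ['email']; // hypothetical field name
const storedItem: DynamoItem = { id: '42', email: 'src:jane@example.com' };

// Export job (source account): decrypt before writing to the landing bucket.
const landedItem = transformPiiFields(storedItem, piiFieldNames, decryptWithSourceKey);
// Import job (target account): re-encrypt with the target key before writing to DynamoDB.
const migratedItem = transformPiiFields(landedItem, piiFieldNames, encryptWithTargetKey);
```

The point of the split is that neither job ever needs the other account's key: the export side only decrypts, and the import side only encrypts.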

Regarding PII data security, the decrypted data is secured in transit and stored in the landing S3 bucket until the migration is completed. We applied extra S3 bucket policies to fend off unexpected data access during migration.
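As a rough illustration of what such a bucket policy could look like, the sketch below builds a policy document as a plain object. This is an assumption about the general shape, not the policy we actually deployed: the function name `buildLandingBucketPolicy`, the bucket name, and the role ARNs are hypothetical placeholders.

```typescript
// Hypothetical names throughout: the bucket name and role ARNs are placeholders,
// and this is not the policy used in the actual migration.
interface PolicyStatement {
  Sid: string;
  Effect: 'Allow' | 'Deny';
  Principal: string;
  Action: string;
  Resource: string[];
  Condition: Record<string, Record<string, string | string[]>>;
}

interface PolicyDocument {
  Version: '2012-10-17';
  Statement: PolicyStatement[];
}

function buildLandingBucketPolicy(bucketName: string, allowedRoleArns: string[]): PolicyDocument {
  const resources = [`arn:aws:s3:::${bucketName}`, `arn:aws:s3:::${bucketName}/*`];
  return {
    Version: '2012-10-17',
    Statement: [
      {
        // Reject any request that is not made over TLS.
        Sid: 'DenyInsecureTransport',
        Effect: 'Deny',
        Principal: '*',
        Action: 's3:*',
        Resource: resources,
        Condition: { Bool: { 'aws:SecureTransport': 'false' } },
      },
      {
        // Reject every principal except the listed migration roles.
        Sid: 'DenyAllExceptMigrationRoles',
        Effect: 'Deny',
        Principal: '*',
        Action: 's3:*',
        Resource: resources,
        Condition: { ArnNotLike: { 'aws:PrincipalArn': allowedRoleArns } },
      },
    ],
  };
}

const landingPolicy = buildLandingBucketPolicy('example-landing-bucket', [
  'arn:aws:iam::111111111111:role/example-export-job-role',
  'arn:aws:iam::222222222222:role/example-import-job-role',
]);
const landingPolicyJson = JSON.stringify(landingPolicy, null, 2);
```

A blanket deny like the second statement also locks out your own admin roles, so the allow-list would need to include whoever manages the bucket during the migration.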

Everything worked in my proof-of-concept. But after I codified the solution with AWS CDK (an Infrastructure-as-Code tool), deployed it to the test environment, and kicked off the migration run, I found that AWS Glue couldn't crawl the data dumped in the landing S3 bucket, let alone import the data into the DynamoDB tables.

For a while, I thought the data hadn't been exported correctly, so I tried Query with S3 Select to check what exactly was in the exported data objects. That's when I found I couldn't even access the objects in the landing bucket, which led me to think and read about S3 object ACLs.

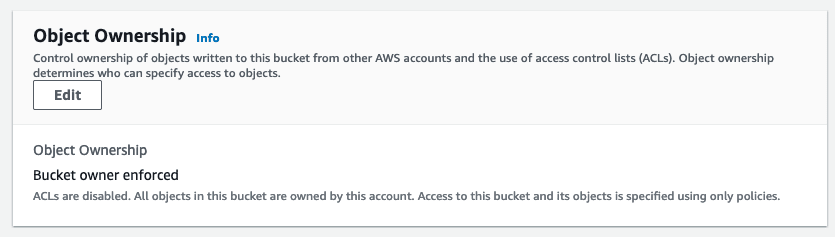

After comparing the landing bucket I manually created and the one provisioned by CDK code, I found the former S3 bucket (created manually) had

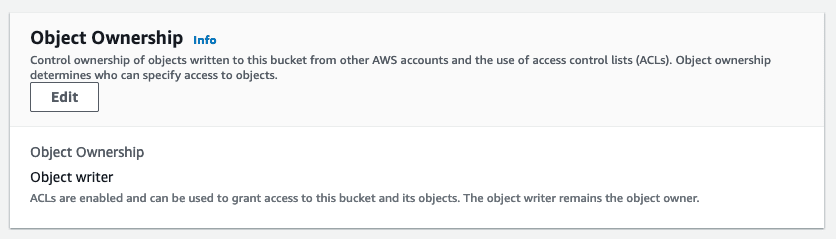

while the latter S3 bucket (provisioned through CDK) had

Since the CDK documentation doesn't mention its default behaviour (https://docs.aws.amazon.com/cdk/api/v1/docs/@aws-cdk_aws-s3.ObjectOwnership.html), I dug into the CDK source code a bit, and it turned out that the default behaviour was what was causing me trouble.

```typescript
/**
 * The objectOwnership of the bucket.
 *
 * @see https://docs.aws.amazon.com/AmazonS3/latest/dev/about-object-ownership.html
 *
 * @default - No ObjectOwnership configuration, uploading account will own the object.
 *
 */
readonly objectOwnership?: ObjectOwnership;
```

The fix was simply specifying the objectOwnership property when defining the S3 bucket 🤷♂️

```typescript
// Assuming CDK v1, as in the docs linked above (v2: 'aws-cdk-lib/aws-s3').
import { Bucket, ObjectOwnership } from '@aws-cdk/aws-s3';

const landingBucket = new Bucket(this, 'migration-landing-bucket', {
  // ...
  // The bucket owner owns every object, whichever account uploads it.
  objectOwnership: ObjectOwnership.BUCKET_OWNER_ENFORCED,
});
```

My reflection: AWS CDK is an abstraction layer over CloudFormation, and CloudFormation calls AWS APIs to manage resources. So do the AWS Console, AWS SDK, and AWS CLI: they all call AWS APIs in the end. However, the AWS teams building these tools may have taken different approaches and principles. Resources created manually in the AWS Console tend to come with a best-practice configuration IMO, while defining resources with IaC really reveals the nitty-gritty under the hood.
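One way to catch this kind of silent default earlier is to inspect the synthesized CloudFormation template before deploying. The sketch below is a hypothetical check, not part of the original migration code: it takes a template as a plain object (for example, the JSON that `cdk synth` writes out) and lists the S3 buckets that don't set an `OwnershipControls` rule. The function name `bucketsWithoutObjectOwnership` and the sample template are made up for illustration.

```typescript
// Minimal shapes for the parts of a CloudFormation template this check reads.
interface CfnResource {
  Type: string;
  Properties?: { OwnershipControls?: { Rules?: { ObjectOwnership?: string }[] } };
}

interface CfnTemplate {
  Resources: Record<string, CfnResource>;
}

// Return the logical IDs of S3 buckets that do not set an ObjectOwnership rule.
function bucketsWithoutObjectOwnership(template: CfnTemplate): string[] {
  return Object.keys(template.Resources).filter((logicalId) => {
    const resource = template.Resources[logicalId];
    if (resource === undefined || resource.Type !== 'AWS::S3::Bucket') {
      return false;
    }
    const rules = resource.Properties?.OwnershipControls?.Rules ?? [];
    return !rules.some((rule) => rule.ObjectOwnership !== undefined);
  });
}

// Hypothetical synthesized template: one bucket relying on the default, one explicit.
const sampleTemplate: CfnTemplate = {
  Resources: {
    ImplicitBucket: { Type: 'AWS::S3::Bucket' },
    LandingBucket: {
      Type: 'AWS::S3::Bucket',
      Properties: { OwnershipControls: { Rules: [{ ObjectOwnership: 'BucketOwnerEnforced' }] } },
    },
    SomeTable: { Type: 'AWS::DynamoDB::Table' },
  },
};

const offendingBuckets = bucketsWithoutObjectOwnership(sampleTemplate);
// offendingBuckets is ['ImplicitBucket']
```

Run as part of a unit test, a check like this turns an implicit default into an explicit decision, which is roughly what the Console does for you behind the scenes.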